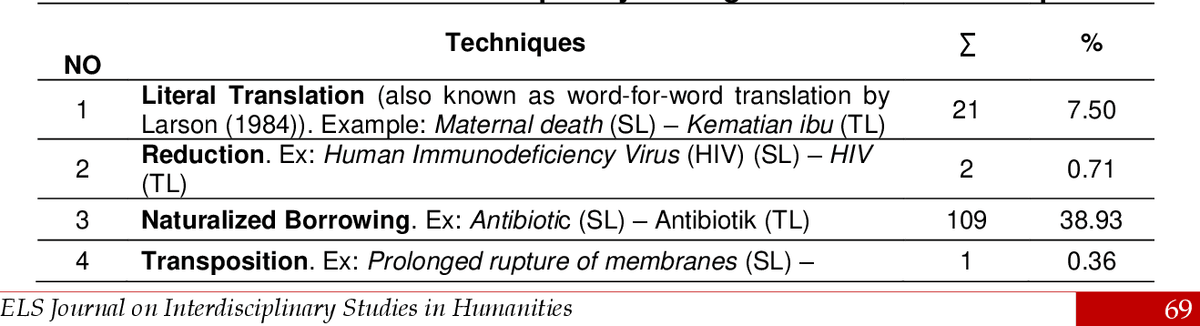

Crosslingual word embeddings learned from monolingual embeddings have a crucial role in many downstream tasks, ranging from machine translation to transfer learning. Adversarial training has shown impressive success in learning crosslingual embeddings and the associated word translation task without any parallel data by mapping monolingual embeddings to a shared space. However, recent work has shown superior performance for non-adversarial methods in more challenging language pairs. In this article, we investigate adversarial autoencoder for unsupervised word translation and propose two novel extensions to it that yield more stable training and improved results. Our method includes regularization terms to enforce cycle consistency and input reconstruction, and puts the target encoders as an adversary against the corresponding discriminator. We use two types of refinement procedures sequentially after obtaining the trained encoders and mappings from the adversarial training, namely, refinement with Procrustes solution and refinement with symmetric re-weighting. Extensive experiments with high- and low-resource languages from two different data sets show that our method achieves better performance than existing adversarial and non-adversarial approaches and is also competitive. We also perform comprehensive ablation studies to understand the contribution of different components of our adversarial model.
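For reference, the Procrustes refinement has a standard closed form. The sketch below uses illustrative notation that is an assumption rather than the article's own: $X$ and $Y$ are $d \times n$ matrices whose columns hold the source and target embeddings of $n$ word pairs from a seed dictionary, and $W$ is the orthogonal map being refined. How the seed pairs are selected is not specified here.

$$
W^{\ast} \;=\; \underset{W \in \mathbb{R}^{d \times d},\; W^{\top} W = I}{\arg\min}\; \lVert W X - Y \rVert_{F} \;=\; U V^{\top},
\qquad \text{where } U \Sigma V^{\top} = \mathrm{SVD}\!\left(Y X^{\top}\right).
$$

Because $W^{\ast}$ is constrained to be orthogonal, it preserves distances and angles within the source embedding space, which is the usual motivation for this kind of refinement step.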